Edit 1: Still working with Microsoft. I can reproduce the issue by following their guide: https://learn.microsoft.com/en-us/azure/aks/configure-azure-cni-dynamic-ip-allocation

Edit 2: Microsoft confirmed my suspicions:

> We identified a regression with a version of DNC that was pushed out.
>
> We are currently rolling back now, and you should see your CNS pods functionality returning shortly if you have not seen it already.

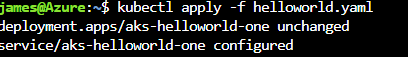

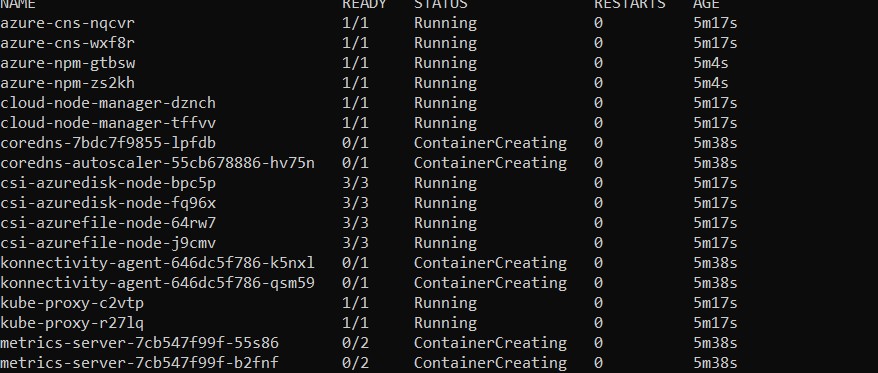

I finished rolling out a new AKS cluster this past Friday and picked it back up this morning. I added a new node pool and started getting errors with four specific pods:

I ran `kubectl describe pod` to see what was going on, and it displayed:

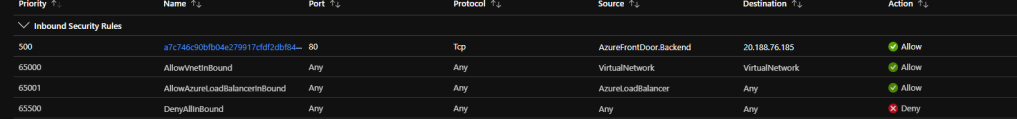

I had changed my outbound NAT IP and thought that had somehow broken something. I wiped the cluster, reverted the NAT change, and redeployed, but no dice. I was going bonkers trying to figure out what happened. I reviewed both the node and pod subnets to make sure I had enough capacity, which I did. I then went to another subscription and tried creating the same AKS cluster from my IaC, but the same thing happened.

Another user pinged me that their cluster was having issues, but it resolved itself. I looked at their logs, and it seems Microsoft must have done maintenance on the backend, which moved some pods around. All their kube-system pods came back up healthy. So what is different between their cluster and mine? I am using dynamic IP allocation and the other user is not. Alright, so let me try a cluster without dynamic IP allocation. Guess what, it worked. This was all working fine last week, so there is something going on in Azure Government, and I am waiting to hear back from Microsoft Support.
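
For anyone who wants to rule out subnet capacity the same way I did, here is a rough sketch of the arithmetic in Python. The CIDR ranges and the required counts below are made-up placeholders, not my actual subnets; the only Azure-specific fact it relies on is that Azure reserves 5 addresses in every subnet.

```python
import ipaddress

# Azure reserves 5 addresses in every subnet (network, gateway,
# two for Azure DNS, and broadcast).
AZURE_RESERVED_IPS = 5


def usable_ips(cidr: str) -> int:
    """Return how many addresses in the subnet are assignable in Azure."""
    network = ipaddress.ip_network(cidr, strict=True)
    return max(network.num_addresses - AZURE_RESERVED_IPS, 0)


def has_capacity(cidr: str, needed: int) -> bool:
    """Check whether the subnet can hold `needed` addresses."""
    return usable_ips(cidr) >= needed


if __name__ == "__main__":
    # Hypothetical subnets and requirements, for illustration only.
    node_subnet = "10.240.0.0/24"
    pod_subnet = "10.241.0.0/22"

    print("node subnet usable IPs:", usable_ips(node_subnet))
    print("pod subnet usable IPs:", usable_ips(pod_subnet))
    print("room for 500 pods:", has_capacity(pod_subnet, 500))
```

If this comes back with plenty of headroom, as it did for me, capacity is not the problem and it is worth looking elsewhere.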